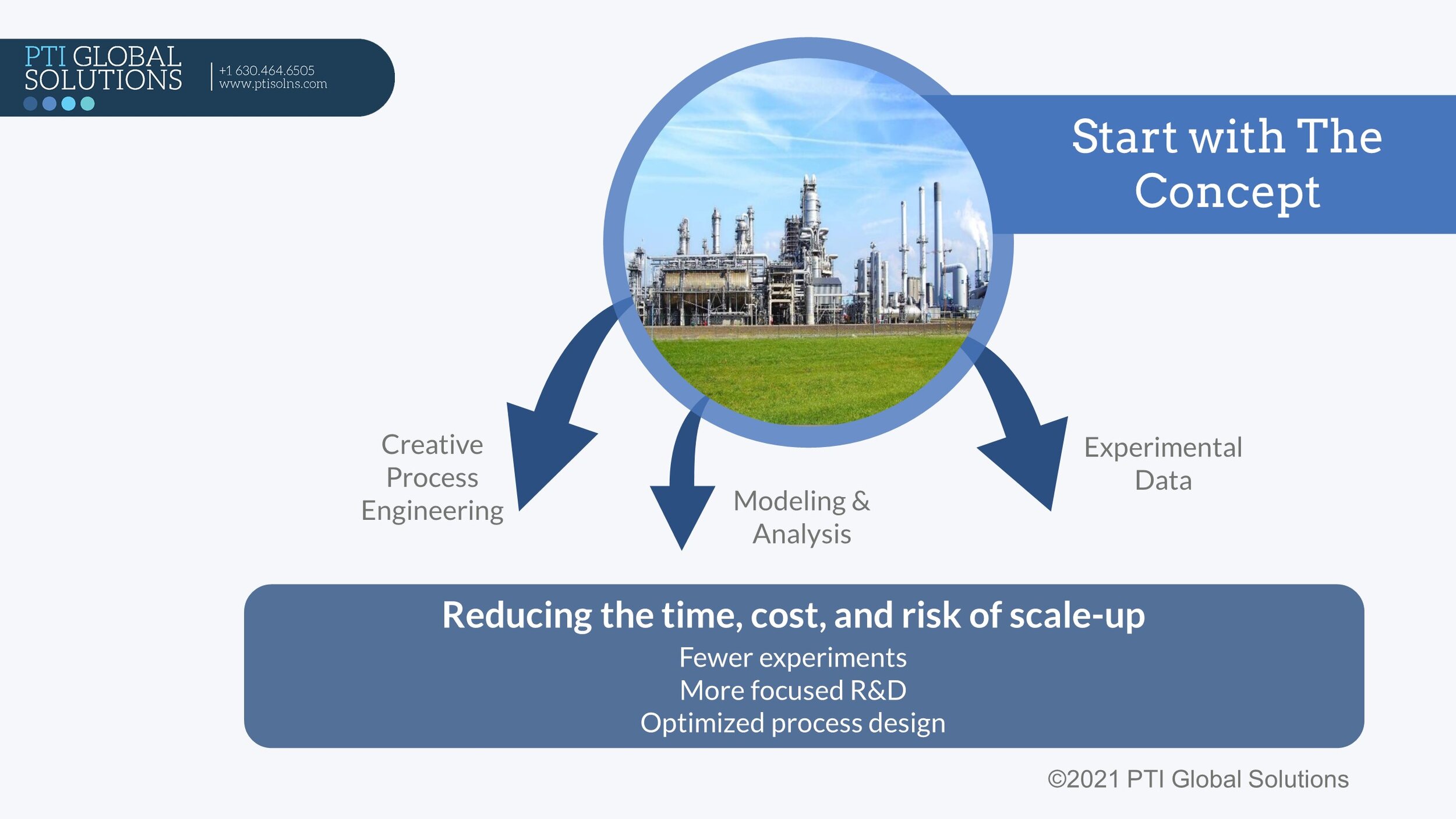

Measuring performance is essential for sustainable technology innovation. Applying science and engineering principles to new problems drives progress, but a new technology must also be technically and economically viable while delivering more sustainable solutions to today's problems. Metrics provide a structured way to track these criteria, validate new technology against the competition, and report to key stakeholders. They help us evaluate reaction and separation tradeoffs, optimize reactor systems, and ensure that our processes are both economic and sustainable. Some examples of key metrics include:

Reactors are the heart of any chemical, biological, or electrochemical process, and the scale-up of new reactor systems has its own subset of critical metrics. By rapidly transitioning from small-scale to pilot-scale reactors, we can gather data that can be used to scale directly to commercial production. Key design metrics such as the volumetric mass transfer coefficient (kLa) and weight hourly space velocity (WHSV) are essential for optimizing reactor performance and can serve as scale-independent parameters for tracking performance at any scale. For instance, in many gas/liquid reaction systems, including gas fermentation, kLa is evaluated to set minimum targets for commercial design, so that the mass transfer rate is sufficient for economic viability. Similarly, WHSV, the mass flow rate of feed per unit mass of catalyst, is a key measure of how heavily the catalyst is loaded, which is crucial for reactor performance.
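As a minimal sketch of how these two metrics are computed, the snippet below uses the standard definitions: the volumetric gas-to-liquid transfer rate is kLa multiplied by the concentration driving force, and WHSV is the feed mass flow rate divided by the catalyst mass. The function names and all numerical values are illustrative assumptions, not data from the projects described here.

```python
def volumetric_transfer_rate(kla_per_h, c_sat, c_bulk):
    """Gas-to-liquid transfer rate per unit liquid volume.

    kla_per_h : volumetric mass transfer coefficient, 1/h
    c_sat     : saturation (equilibrium) concentration of the gas in the liquid
    c_bulk    : actual dissolved concentration in the bulk liquid
    Returns a rate in (concentration units)/h.
    """
    return kla_per_h * (c_sat - c_bulk)


def whsv(feed_mass_rate, catalyst_mass):
    """Weight hourly space velocity in 1/h.

    feed_mass_rate : mass of feed per hour (e.g. kg/h)
    catalyst_mass  : mass of catalyst in the reactor, same mass unit
    """
    if catalyst_mass <= 0:
        raise ValueError("catalyst_mass must be positive")
    return feed_mass_rate / catalyst_mass


# Illustrative numbers only (assumed, not measured values).
transfer_rate = volumetric_transfer_rate(kla_per_h=200.0, c_sat=1.0, c_bulk=0.25)
bench_whsv = whsv(feed_mass_rate=0.5, catalyst_mass=0.25)

print(f"transfer rate: {transfer_rate} mmol/L/h")
print(f"WHSV: {bench_whsv} 1/h")
```

Because neither quantity depends on the absolute size of the vessel, the same functions can be applied to bench, pilot, and commercial data to compare performance across scales.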

Metrics used for design, scale-up, and operation of reactor systems in thermochemical and bioprocessing systems can include:

Case Studies and Examples

To illustrate the practical application of these concepts, let's look at a few case studies:

1. Sustainable Bio-based Base Oils: Base oils are typically produced as a petrochemical fraction and are used to make lubricants, greases, and other heavy oils for industrial use. In this example, reactor scale-up metrics were applied to develop sustainable bio-based replacements for these petrochemicals. The key scale-up metric was the Reynolds number, a dimensionless ratio of inertial to viscous forces that characterizes the flow of both the liquid phase and the gas phase over the solid catalyst. Applying standard guidelines for Reynolds number correlations in trickle bed reactor systems allowed us to identify reactor conditions in the regime known to promote sufficient mass transfer for good performance.
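The Reynolds number check for each phase can be sketched as follows. This assumes a particle Reynolds number based on superficial velocity and catalyst particle diameter, which is one common convention for packed and trickle beds; the definition actually used in this project is not stated, and the fluid properties below are placeholder values chosen only to make the example run.

```python
def reynolds_number(density, superficial_velocity, particle_diameter, viscosity):
    """Particle Reynolds number, Re = rho * u * d_p / mu (dimensionless).

    density              : fluid density, kg/m^3
    superficial_velocity : volumetric flow / bed cross-section, m/s
    particle_diameter    : catalyst particle diameter, m
    viscosity            : dynamic viscosity, Pa*s
    """
    if viscosity <= 0:
        raise ValueError("viscosity must be positive")
    return density * superficial_velocity * particle_diameter / viscosity


# Placeholder properties (assumptions, not project data).
PARTICLE_DIAMETER_M = 0.002

re_liquid = reynolds_number(
    density=800.0, superficial_velocity=0.005,
    particle_diameter=PARTICLE_DIAMETER_M, viscosity=0.002,
)
re_gas = reynolds_number(
    density=10.0, superficial_velocity=0.1,
    particle_diameter=PARTICLE_DIAMETER_M, viscosity=2.0e-5,
)

print(f"Re (liquid): {re_liquid:.1f}")
print(f"Re (gas):    {re_gas:.1f}")
```

The pair of values would then be compared against the liquid and gas Reynolds number ranges given by whichever trickle bed flow-regime guideline is being followed.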

2. Biobased Monomer Production: Another example involves the production of a biobased monomer. The process rapidly transitioned from a 50 mL bench reactor to a 1500 mL pilot reactor, a 30x scale-up. The pilot results could then be used to scale directly to commercial production. In this case, the key scale-up parameter was weight hourly space velocity (WHSV), a parameter that determines the amount of catalyst needed for a given feed rate and reactor performance. The table below shows a typical scale-up plan that could be used for this type of problem.

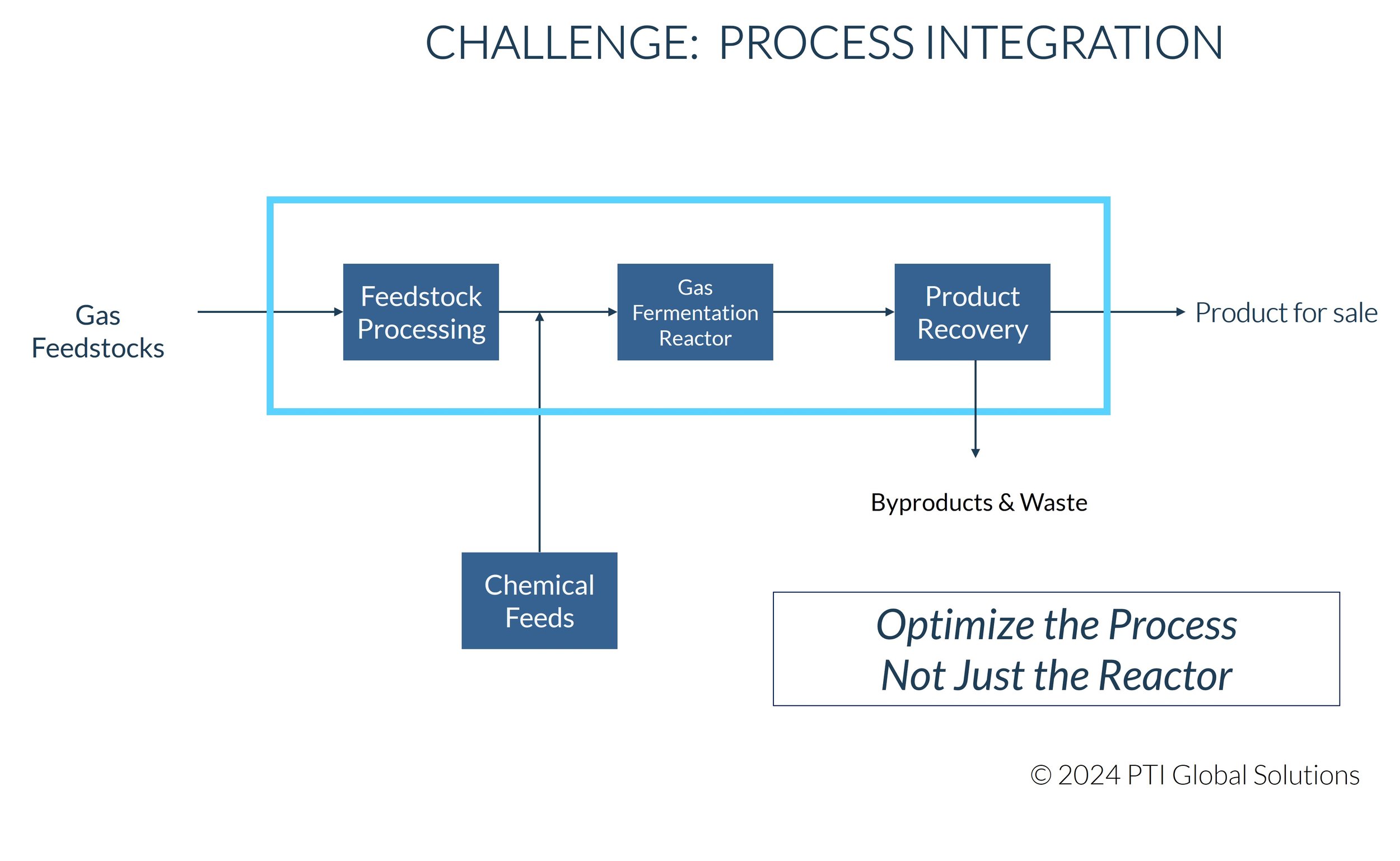
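A constant-WHSV scale-up plan of this kind can be sketched in a few lines. The example treats the 50 mL and 1500 mL figures as catalyst bed volumes and converts them to catalyst mass with a bulk density; both that interpretation and the WHSV and density values are assumptions made for illustration, and `scale_up_plan` is a hypothetical helper.

```python
def scale_up_plan(whsv_per_h, bulk_density_g_per_ml, bed_volumes_ml):
    """Build one row per scale, holding WHSV constant.

    whsv_per_h            : target WHSV, g feed per g catalyst per hour
    bulk_density_g_per_ml : packed catalyst bulk density (assumed value)
    bed_volumes_ml        : catalyst bed volumes, smallest scale first
    """
    base_volume = bed_volumes_ml[0]
    plan = []
    for volume in bed_volumes_ml:
        catalyst_mass_g = volume * bulk_density_g_per_ml
        plan.append({
            "bed_volume_ml": volume,
            "scale_factor": volume / base_volume,
            "catalyst_mass_g": catalyst_mass_g,
            # Constant WHSV means feed rate scales with catalyst mass.
            "feed_rate_g_per_h": whsv_per_h * catalyst_mass_g,
        })
    return plan


# Assumed WHSV and bulk density; volumes are the two scales named in the text.
plan = scale_up_plan(whsv_per_h=2.0, bulk_density_g_per_ml=0.8,
                     bed_volumes_ml=[50, 1500])

for row in plan:
    print(row)
```

Holding WHSV fixed means the feed rate grows by the same factor as the catalyst mass, so the 30x increase in bed volume implies a 30x increase in feed rate under these assumptions.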

3. Novel Bioreactor Design: In this case, a novel bioreactor was designed for gas fermentation. The key design metric, kLa (the volumetric mass transfer coefficient), was used to set performance targets and design the equipment. Published correlations were used to evaluate mass transfer performance as a function of the superficial velocity of the gas phase through the reactor system. The minimum required mass transfer performance then determined the reactor design parameters necessary for an economic process design.
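One way to turn a kLa target into a design parameter is sketched below. It assumes a power-law correlation of the form kLa = a * u_g^b, a shape commonly used for bubble-column-type reactors; the correlation actually used for this bioreactor is not given, and the coefficients `A_COEFF` and `B_EXPONENT` are placeholders rather than published values.

```python
def kla_power_law(u_g, a, b):
    """Estimate kLa (1/s) from superficial gas velocity u_g (m/s).

    Assumes a correlation of the form kLa = a * u_g**b.
    """
    if u_g < 0:
        raise ValueError("u_g must be non-negative")
    return a * u_g ** b


def min_superficial_velocity(kla_target, a, b):
    """Invert the power law: smallest u_g (m/s) that reaches kla_target."""
    if kla_target <= 0 or a <= 0 or b <= 0:
        raise ValueError("kla_target, a and b must be positive")
    return (kla_target / a) ** (1.0 / b)


# Placeholder coefficients and target (assumptions for illustration only).
A_COEFF = 0.3
B_EXPONENT = 0.7
KLA_TARGET_PER_S = 0.05

u_g_min = min_superficial_velocity(KLA_TARGET_PER_S, A_COEFF, B_EXPONENT)
kla_check = kla_power_law(u_g_min, A_COEFF, B_EXPONENT)

print(f"minimum superficial gas velocity: {u_g_min:.4f} m/s")
print(f"kLa at that velocity: {kla_check:.4f} 1/s")
```

With the minimum superficial velocity in hand, the reactor cross-section follows from the required gas flow, which is how a mass transfer target feeds into equipment sizing.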

In conclusion, metrics play a pivotal role in the successful design, scale-up, and optimization of sustainable technologies. The case studies presented in this document illustrate the practical application of these concepts, demonstrating how metrics can guide decision-making and ensure that our processes are efficient, economical and sustainable.